QuakeCity – Synthetic dataset of physics-based earthquake damaged buildings in cities

References:

- V. Hoskere, “Developing autonomy in structural inspections through computer vision and graphics,” University of Illinois at Urbana-Champaign, 2021.

- V. Hoskere, Y. Narazaki, B. F. Spencer Jr., Physics-Based Graphics Models in 3D Synthetic Environments as Autonomous Vision-Based Inspection Testbeds. Sensors 2022, 22, 532. https://doi.org/10.3390/s22020532

- B. F. Spencer, V. Hoskere, and Y. Narazaki, “Advances in Computer Vision-Based Civil Infrastructure Inspection and Monitoring,” Engineering, vol. 5, no. 2. Elsevier Ltd, pp. 199–222, 01-Apr-2019.

- V. Hoskere, Y. Narazaki, B. F. Spencer Jr., Learning to detect important visual changes for structural inspections using Physics-based graphics models, 9th International Conference on Structural Health Monitoring of Intelligent Infrastructure, St. Louis, MO

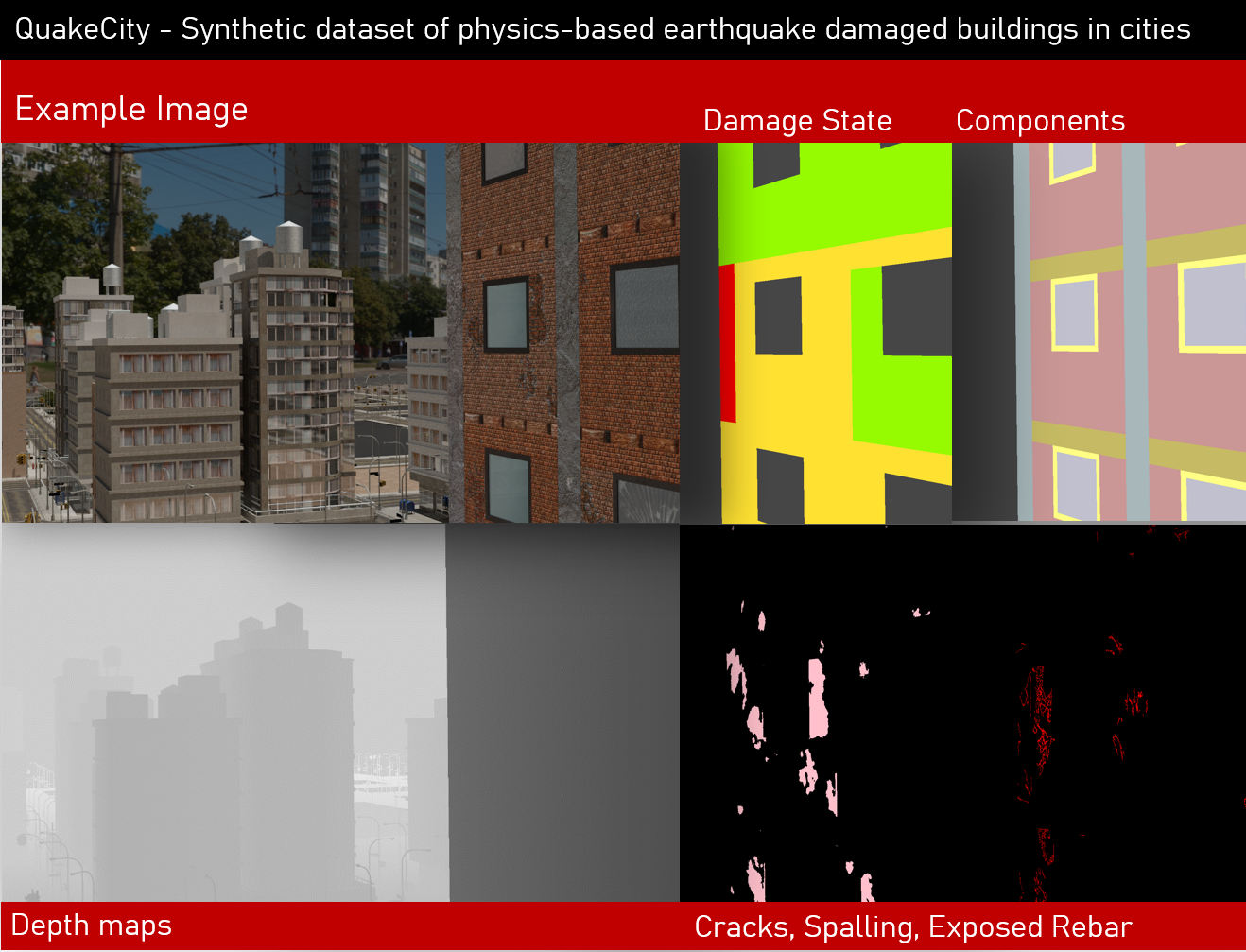

The QuakeCity dataset is produced using physics-based graphics models (PBGMs), as proposed by Hoskere et al. 2021. In a PBGM, surface damage textures are applied to a photorealistic model of the structure based on global and local finite element analyses of the building. The QuakeCity dataset was released as part of IC-SHM for project task 1. Images are rendered from multiple simulated UAV surveys of damaged buildings in a city environment. Each image has six sets of annotations: three damage masks (cracks, spalling, exposed rebar), components, component damage states, and a depth map.

QuakeCity Annotations

- Damage subtasks: Annotations for semantic segmentation (pixel-level predictions) of three damage types:

  - Cracks

  - Exposed rebar

  - Spalling

- Component task: Component annotations including walls, columns, beams, windows, window frames, and slabs.

- Component damage state task: Component damage states are no damage, light, moderate, severe, or NA.
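The annotation sets listed above can be pictured as one record per image. The following is a minimal sketch only: the class names come from the list above, but the integer ids, the `QuakeCitySample` container, and the `label_name` helper are hypothetical and do not describe the dataset's actual file layout or label encoding.

```python
from dataclasses import dataclass, fields
from typing import List, Sequence

# Class names follow the annotation list; the ORDER (and therefore the
# integer id of each class) is an assumption made for illustration.
DAMAGE_TYPES = ("cracks", "exposed_rebar", "spalling")
COMPONENT_CLASSES = ("wall", "column", "beam", "window", "window_frame", "slab")
DAMAGE_STATES = ("no_damage", "light", "moderate", "severe", "NA")

Mask = List[List[int]]     # H x W grid of integer labels
Depth = List[List[float]]  # H x W grid of depth values


@dataclass
class QuakeCitySample:
    """Hypothetical container for the six annotations of one image."""
    cracks: Mask         # binary damage mask
    exposed_rebar: Mask  # binary damage mask
    spalling: Mask       # binary damage mask
    components: Mask     # per-pixel component class id
    damage_states: Mask  # per-pixel component damage state id
    depth: Depth         # per-pixel depth


def label_name(class_id: int, names: Sequence[str] = COMPONENT_CLASSES) -> str:
    """Map an assumed integer id to its class name."""
    return names[class_id]
```

Check the released files for the real encoding before relying on any of these ids.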

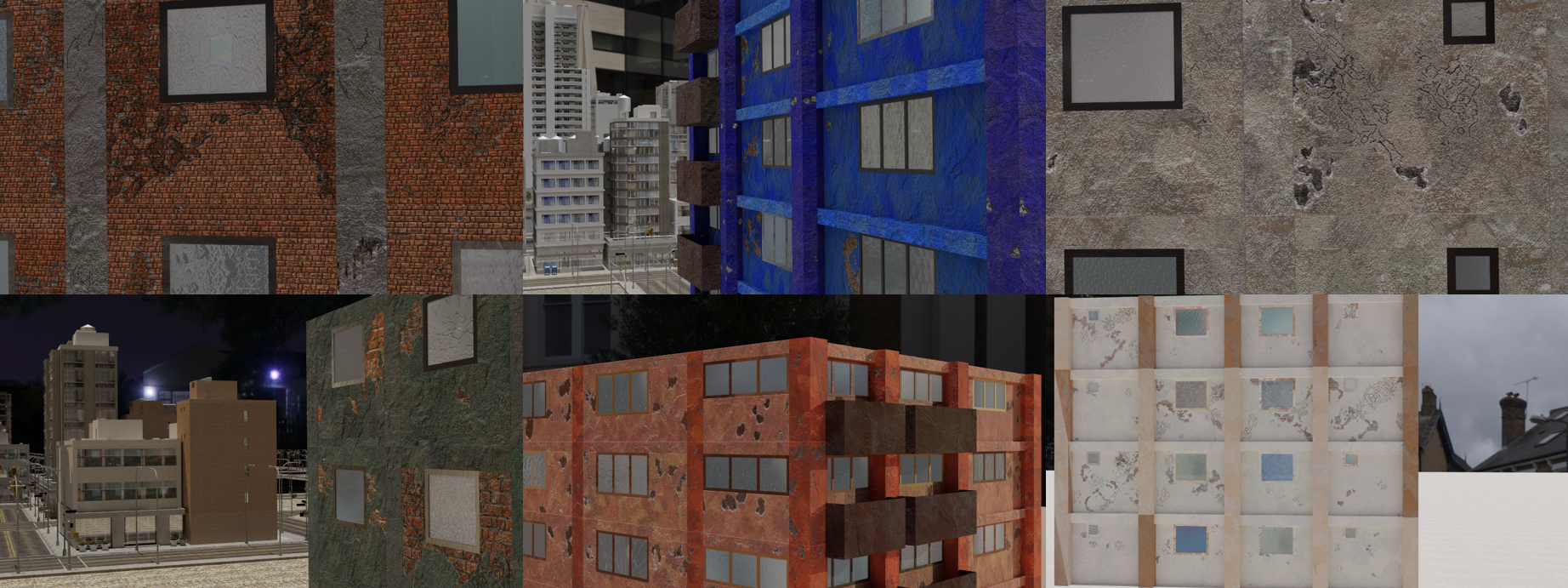

QuakeCity Features

- Physics-based damage

- Parametric buildings with different layouts, paint colors, dimensions, and heights

- Different lighting scenarios (daytime, dusk, nighttime with electric lighting)

- Realistic UAV camera path with oblique and orthogonal views

- Varying camera distance (close-ups, zoomed out views)

- Varying damage levels

- 4,809 images of size 1920×1080, each with 6 annotations

Background

Visual inspections are widely used to assess the condition of civil infrastructure in the aftermath of earthquakes. Careful manual inspections can be time-consuming and laborious, and computer vision can help automate several inspection subtasks, such as data acquisition, data processing, and decision making. Many researchers have built datasets and deep learning models to better understand the potential of computer vision for the data processing task. Combining component identification with damage identification makes it possible to determine the damage states of components, which are important inputs to overall decision making. Additionally, robotic data acquisition methods, such as UAVs, can acquire a vast number of images with little effort. A key challenge for an optimal system is determining not only how to best use the acquired data, but also how to acquire the data that is most useful. Considering the choice of robot path together with the methods for processing the data can lead to more efficient systems that improve the reliability of visual inspections.
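As a rough illustration of how component identification and damage identification might be combined, the sketch below computes the fraction of damaged pixels within each component class. It is not the authors' method. The function name, the nested-list mask representation, and the integer component ids are all assumptions, and no mapping from fractions to damage states is implied.

```python
from collections import Counter
from typing import Dict, List

Mask = List[List[int]]  # H x W grid of integer labels


def damaged_fraction_per_component(
    components: Mask, damage_masks: List[Mask]
) -> Dict[int, float]:
    """Return, per component id, the share of its pixels flagged in any damage mask."""
    total: Counter = Counter()
    damaged: Counter = Counter()
    for r, row in enumerate(components):
        for c, comp_id in enumerate(row):
            total[comp_id] += 1
            # A pixel counts as damaged if any damage mask is non-zero there.
            if any(mask[r][c] for mask in damage_masks):
                damaged[comp_id] += 1
    return {comp_id: damaged[comp_id] / n for comp_id, n in total.items()}


# Toy 2 x 4 example with two hypothetical component ids (0 and 1).
components = [[0, 0, 1, 1],
              [0, 0, 1, 1]]
cracks = [[1, 0, 0, 0],
          [0, 0, 0, 0]]
spalling = [[1, 1, 0, 0],
            [0, 0, 0, 1]]

fractions = damaged_fraction_per_component(components, [cracks, spalling])
print(fractions)  # {0: 0.5, 1: 0.25}
```

On full 1920×1080 masks an array library would be far faster; plain lists are used here only to keep the sketch dependency-free.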

With field data acquisition, it is challenging to evaluate the inspection process comprehensively (i.e., data acquisition, data processing, and decision making together). This project aims to develop and implement computer vision-based damage state estimation of civil infrastructure components with the help of synthetic environments. A dataset of annotated images, termed QuakeCity, is generated from multiple simulated UAV surveys of earthquake-damaged buildings.

Download

Download the dataset here!

Please cite:

- V. Hoskere, “Developing autonomy in structural inspections through computer vision and graphics,” University of Illinois at Urbana-Champaign, 2021.

- V. Hoskere, Y. Narazaki, B. F. Spencer Jr., Physics-Based Graphics Models in 3D Synthetic Environments as Autonomous Vision-Based Inspection Testbeds. Sensors 2022, 22, 532. https://doi.org/10.3390/s22020532

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. To learn more about this license, visit http://creativecommons.org/licenses/by-nc-sa/4.0

(C) Vedhus Hoskere