EIGAN – Unpaired Image-to-Image Translation of Structural Damage

Paper

Abstract

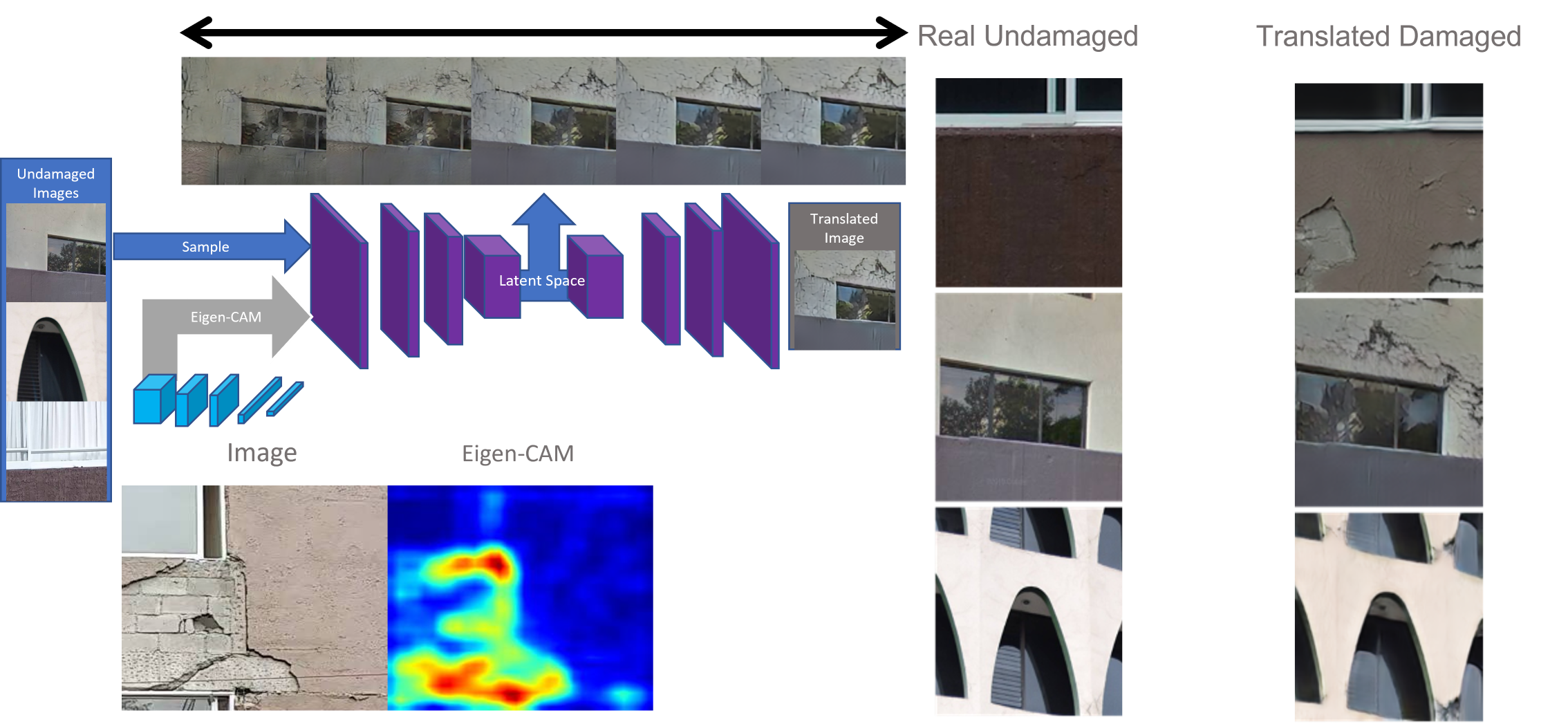

Condition assessment of civil infrastructure from manual inspections can be time-consuming, subjective, and unsafe. Advances in computer vision and Deep Neural Networks (DNNs) provide methods for automating important condition assessment tasks such as damage and context identification. One critical challenge in training robust and generalizable DNNs for damage identification is the difficulty of obtaining large and diverse datasets. To make the most of available data, researchers have investigated using synthetic images of damaged structures from Generative Adversarial Networks (GANs) for data augmentation. However, GANs are limited in the diversity of data they can produce, as they are only able to interpolate between samples of damaged structures in a dataset. Unpaired image-to-image translation using Cycle Consistent Adversarial Networks (CCANs) provides one means of extending the diversity of, and control over, generated images, but has not been investigated for applications in condition assessment. We present EIGAN, a novel CCAN architecture for generating realistic synthetic images of a damaged structure, given an image of its undamaged state. EIGAN can translate undamaged images to damaged representations and vice versa while retaining the geometric structure of the infrastructure (e.g., building shape, layout, color, and size). We create a new unpaired dataset of damaged and undamaged building images taken after the 2017 Puebla Earthquake. Using this dataset, we demonstrate, with both qualitative and quantitative measures, how EIGAN addresses shortcomings of three other established CCAN architectures specifically for damage translation. Additionally, we introduce a new methodology to explore the latent space of EIGAN, allowing for some control over the properties of the generated damage (e.g., the damage severity).
The results demonstrate that unpaired image-to-image translation of undamaged to damaged structures is an effective means of data augmentation to improve network performance.
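
For readers unfamiliar with cycle-consistent translation, the sketch below illustrates the generic cycle-consistency idea that CCAN-style models build on: translating an undamaged image to a damaged one and back should recover the original, and likewise in the other direction. It is a toy illustration only, not EIGAN's actual loss or architecture. The names `cycle_consistency_loss`, `toy_add_damage`, and `toy_remove_damage` are hypothetical, and the "generators" are simple pixel shifts standing in for trained networks.

```python
from typing import Callable, List

Image = List[float]  # toy "image": a flat list of pixel intensities in [0, 1]
Generator = Callable[[Image], Image]


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    undamaged: Image,
    damaged: Image,
    g_damage: Generator,  # maps undamaged -> damaged (assumed name)
    g_repair: Generator,  # maps damaged -> undamaged (assumed name)
) -> float:
    """Sum of the forward and backward reconstruction errors."""
    # Forward cycle: undamaged -> damaged -> undamaged
    forward = l1_distance(g_repair(g_damage(undamaged)), undamaged)
    # Backward cycle: damaged -> undamaged -> damaged
    backward = l1_distance(g_damage(g_repair(damaged)), damaged)
    return forward + backward


# Hypothetical stand-ins for trained generators: darken or brighten pixels,
# clamped to the valid intensity range.
def toy_add_damage(img: Image) -> Image:
    return [max(0.0, p - 0.25) for p in img]


def toy_remove_damage(img: Image) -> Image:
    return [min(1.0, p + 0.25) for p in img]


undamaged_img = [0.5, 0.75, 1.0]
damaged_img = [0.0, 0.5, 1.0]

loss = cycle_consistency_loss(
    undamaged_img, damaged_img, toy_add_damage, toy_remove_damage
)
print(f"cycle-consistency loss: {loss:.4f}")
```

In this toy setup the forward cycle reconstructs the undamaged image exactly, while clamping in the backward cycle loses information for one pixel, so the loss is nonzero. In an actual CCAN, a term of this kind is typically combined with adversarial losses so that translated images are both realistic and structurally faithful to the input.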

Please cite:

- Varghese, S., and V. Hoskere. 2023. “Unpaired image-to-image translation of structural damage.” Advanced Engineering Informatics, 56: 101940. Elsevier. https://doi.org/10.1016/J.AEI.2023.101940.

- Varghese, S., R. Wang, and V. Hoskere. 2022. “Image to Image Translation of Structural Damage Using Generative Adversarial Networks.” Structural Health Monitoring 2021: Enabling Next Generation SHM for Cyber-Physical Systems – DEStech Publishing Inc., S. Farhangdoust, A. Guemes, and F.-K. Chang, eds., 610–619.